The search for iptv player windows in 2026 is usually not about “finding channels” — it’s about getting reliable playback on a Windows PC: quick start, smooth switching, low buffering, and a setup that behaves consistently across multiple devices. This guide stays strictly DMCA-safe by focusing on IPTV as a delivery technology and operational system (network quality, monitoring, uptime, and scalability), not content promises or lists.

What “IPTV Player Windows” means in 2026 (and what it doesn’t)

When people type iptv player windows, they are usually looking for a practical answer to one of these questions:

- “What’s the most stable way to play IPTV streams on Windows?”

- “How do I avoid buffering and random drops on my PC?”

- “Can I use Windows as a hub while also watching on TV and mobile?”

- “What should I check first: my device, my network, or the service?”

In other words, the intent is often transactional (make a good decision and avoid a bad one): users want a trusted path to stable playback. That’s why this article leans into professional concepts like QoE (Quality of Experience), monitoring, redundancy, and uptime — because those factors explain why some setups work consistently while others fail under real-world conditions.

DMCA-safe boundary (non-negotiable)

This article is written to stay clean and compliant:

- IPTV is a delivery technology; legality depends on licensing and jurisdiction

- No “free/unlock/watch everything” language

- No channel lists, no entertainment brand references

- No bypass methods or instructions that enable infringement

- We focus on performance, infrastructure, and operations

Quote (operations mindset): “Reliable playback isn’t a feature — it’s the result of a system that’s measured, maintained, and designed to degrade gracefully.”

Why “IPTV Player Windows” playback is different from TV boxes

A Windows PC can be the most flexible way to run an IPTV player, but it can also be more sensitive to the real world than a dedicated living-room device. In 2026, the biggest difference is that Windows sits inside a complex environment:

- The PC runs multiple apps at once (browser tabs, calls, background updates)

- Drivers, power settings, and background processes can change performance

- Wi-Fi conditions vary more than people expect, especially in apartments and offices

- Security tools and network policies can affect streaming behavior

A dedicated Android TV box often wins for “always-on simplicity,” but Windows wins for:

- multi-monitor control

- fast troubleshooting and testing

- business workflows (meeting rooms, desks, signage-like scenarios)

- keyboard/mouse precision and logging/diagnostics

That’s why the best approach is not “Windows is better” or “boxes are better.” The right choice depends on whether you need control or appliance-style reliability.

Quick decision: iptv player windows vs Android TV box (2026)

If you are coming from a "best IPTV boxes in 2026 (fast, affordable & reliable)" comparison, the clean way to map it onto iptv player windows intent is to compare roles:

- Windows player = control station + diagnostics + flexible workflows

- Android TV box = stable living-room endpoint + consistent UX

Windows vs box scorecard (2026)

| Requirement (2026) | Windows IPTV player | Android TV box |
|---|---|---|
| Fast startup and switching | Strong (depends on PC health) | Strong (appliance-style) |
| Multi-device coordination | Excellent (Windows as hub) | Good (per-endpoint) |
| Troubleshooting power | Best-in-class | Limited |
| “Always-on” simplicity | Moderate | Excellent |
| Updates & maintenance | More variables | More predictable |
| Business/hospitality workflows | Strong with policies | Strong with managed devices |

Practical takeaway:

Choose a Windows IPTV player when you want control, testing, multi-screen use, and a “hub” device. Choose an Android TV box when your priority is living-room reliability with minimal maintenance.

A trusted intent-to-solution map (what you’re really trying to solve)

This map keeps the conversation professional and avoids risky framing. It helps you translate a vague search (“iptv player windows”) into a clean, measurable decision.

Intent → solution map

| What the user wants | What it really means | Best-fit approach | What to measure |
|---|---|---|---|
| “No buffering” | stable network delivery + player resilience | Windows for diagnostics, box for endpoint stability | jitter, packet loss, rebuffer rate |
| “Fast, smooth playback” | low start delay + stable bitrate | Windows with stable hardware acceleration | startup time, bitrate stability |
| “Works on all devices” | consistent UX across TV/PC/mobile | Windows as hub + Android TV endpoints | multi-device concurrency |
| “Reliable for business” | predictable operations + supportability | managed Windows + controlled network | incident repeatability, support response |
| “Hospitality use” | uniform behavior across rooms | controlled endpoints + monitoring mindset | uptime, device inventory stability |

Use cases (2026): home power users, businesses, hospitality

Home power users (multi-device households)

Power users often have 3–6 devices competing for the same network: TV, phones, tablets, laptops, consoles, smart devices, and remote-work equipment. In this world, Windows becomes the “control station,” not necessarily the only screen. A clean strategy is:

- Use Windows to test behavior (is it the network or the TV endpoint?)

- Run your most stable endpoint on an Android TV device for the main room

- Keep mobile as a quick comparison device (same Wi-Fi vs different network)

What makes this professional is that you stop guessing and start isolating variables:

- Does buffering appear only on Wi-Fi?

- Does it happen only at peak hours?

- Does it happen only on one device type?

That’s how “iptv player windows” becomes a decision tool, not a gamble.

Businesses (offices, waiting rooms, meeting areas)

Business environments are less forgiving. The goal is not “maximum features,” but predictability. The strongest setups share these qualities:

- controlled update behavior (you don’t want surprises)

- stable wired networking where possible

- clear support expectations (a real diagnostic flow)

This is exactly where enterprise mindset matters: you are building a small service, not “trying an app.”

Hospitality (multiple rooms, many endpoints)

Hospitality turns small issues into big ones because problems scale. The most important difference here is operational:

- device inventory matters

- update cycles must be controlled

- the network must be designed to avoid one room impacting another

Windows can still be useful — often as a central management and testing station, while endpoints are simplified for guests.

Trust signals that reduce risk and improve stability

Long-term trust comes from showing how reliable systems actually behave. These are the trust signals that matter:

1) Monitoring mindset (even for non-engineers)

Monitoring doesn’t have to mean complex dashboards. It means the service and the user can answer:

- What changed when it started failing?

- Is the issue repeatable at the same time?

- Is it device-specific or network-wide?

2) Redundancy language (without hype)

Redundancy is the difference between “it fails hard” and “it degrades gracefully.” In practice, it shows up as:

- fewer peak-time collapses

- faster recovery from partial issues

- stable switching even under load

3) Support clarity (SLA-style, calm)

A trustworthy support process sounds like:

- “We’ll isolate device vs network vs service layer”

- “We’ll ask for a time window and reproducibility”

- “We can explain what we saw and what we changed”

A low-trust support process sounds like vague reassurance without diagnostics.

Mini case study (Windows-first): fixing instability without chasing myths

A home user runs an IPTV player on a Windows desktop and reports “random buffering.” They also have an Android TV device in the living room. The first assumption is “the service is unstable,” but a layered check tells a different story:

- On Windows (wired Ethernet), playback is stable most of the day

- On TV (Wi-Fi), buffering spikes in the evening

- On mobile (Wi-Fi), the same evening buffering appears

Conclusion: not a “Windows player problem,” but a Wi-Fi congestion problem during peak hours.

The fix is not a new player. The fix is improving the delivery layer (network stability), which is the only change that improves all devices at once.

Lesson: When Windows is stable on Ethernet, it’s often the best truth source for isolating the real issue.

Where worldiptv fits (evaluation-first, not hype)

When you evaluate a service like worldiptv, keep it professional: treat it like a system you’re selecting for stability and multi-device consistency, not for content promises. A calm evaluation path is:

- test on Windows (control + diagnostics)

- validate on Android TV (living-room endpoint)

- check multi-device consistency (TV + mobile + browser)

If you want to review plan options as an evaluation model (not a push), use:

For deeper reading on IPTV as technology and operations:

Regional searches such as germany iptv and deutschland iptv usually carry the same intent: people are really asking whether a setup “works reliably in my environment.” The same evaluation path applies, without any risky framing.

External context (infrastructure + market signals)

Enterprise-grade streaming reliability depends on the same foundations businesses use for networks: managed routing, predictable broadband behavior, and a market that continues to demand stable delivery experiences in 2026. These references help anchor that infrastructure-first framing:

https://www.grandviewresearch.com/industry-analysis/multi-protocol-labelled-switching-internet-protocol-virtual-private-network-market

https://www.oecd.org/en/publications/developments-in-cable-broadband-networks_5kmh7b0s68g5-en.html

https://www.fortunebusinessinsights.com/pay-tv-market-111551

Performance in 2026: QoE/QoS, Monitoring, Uptime, and “Enterprise-Style” Reliability

If your iptv player windows setup feels “random” in 2026, the cause is rarely random. Stable playback is usually the result of two measurable layers working together:

- QoS (Quality of Service): how clean and consistent the network delivery is

- QoE (Quality of Experience): what the viewer actually feels (startup speed, buffering, smoothness)

This matters even more when people search terms like germany iptv or deutschland iptv, because the intent is often: “Will this be reliable in my real environment—apartment Wi-Fi, office networks, or multi-device homes?” The clean answer is: reliability comes from measurement + isolation + operational discipline, not promises.

QoE vs QoS (simple definitions that explain most problems)

QoS is what the network is doing:

- latency (delay)

- jitter (delay variation)

- packet loss (missing data)

- throughput (capacity over time, not just a one-time speed test)

QoE is what you experience:

- how fast playback starts

- how often it buffers

- whether quality stays stable

- whether audio/video stays in sync

A key point for Windows users: you can have a “fast connection” and still get buffering if the connection is unstable (jitter/loss). Windows is often the best “truth machine” for diagnosing this because you can test more cleanly, compare Wi-Fi vs Ethernet, and keep notes.

Quote (operations mindset): “Speed is capacity. Stability is quality. Streaming needs both.”
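To make the "fast but unstable" point concrete, here is a minimal Python sketch that summarizes a series of round-trip latency samples, such as you might collect from repeated pings. It assumes lost probes are recorded as `None`, and it computes jitter as the mean absolute difference between consecutive answered samples, a simplified stand-in for the smoothed estimator in RFC 3550. The function name is hypothetical.

```python
from statistics import mean
from typing import Optional


def summarize_link(rtts_ms: list[Optional[float]]) -> dict[str, float]:
    """Summarize latency, jitter, and loss from RTT samples (None = lost probe)."""
    received = [r for r in rtts_ms if r is not None]
    if not received:
        raise ValueError("no probes were answered")
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    # Jitter: average change in delay between consecutive answered probes.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "avg_latency_ms": mean(received),
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "loss_pct": loss_pct,
    }


# Same average latency (20 ms), very different stability.
steady = [20, 21, 20, 19, 20, 21, 20, 19]
bursty = [5, 40, 8, 35, 5, 38, 9, 20]
print(summarize_link(steady))
print(summarize_link(bursty))
```

Both links report the same average latency, which is roughly what a one-off speed test sees, but the second link's jitter is many times higher, and that is the kind of instability that shows up as buffering.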

The QoE metrics that matter for iptv player windows (2026)

These are the metrics that predict whether playback will feel professional and consistent. Even if you don’t run dashboards, understanding them gives you a calm, reliable way to judge quality.

QoE metrics (what users feel)

- Startup time: how quickly playback begins after pressing play

- Rebuffer ratio: how often playback stops to buffer

- Bitrate stability: whether quality jumps up/down constantly

- Error frequency: player crashes, stream drops, repeated restarts

- A/V sync stability: whether audio drifts behind video
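The first metrics in this list can be derived from a simple playback event log. The sketch below assumes a hypothetical log format of `(seconds, event)` tuples with events named `play_pressed`, `first_frame`, `stall_start`, `stall_end`, and `stop`. Real players expose different telemetry, so treat this as an illustration of the definitions, not any player's API. Rebuffer ratio is computed here as stalled time divided by time since the first frame, which is one common definition.

```python
def qoe_summary(events: list[tuple[float, str]]) -> dict[str, float]:
    """Startup time, rebuffer ratio, and stall count from a hypothetical event log."""
    marks: dict[str, float] = {}
    stalled_s = 0.0
    stall_count = 0
    stall_began = None
    for t, name in events:
        if name in ("play_pressed", "first_frame", "stop"):
            marks[name] = t
        elif name == "stall_start":
            stall_began = t
            stall_count += 1
        elif name == "stall_end" and stall_began is not None:
            stalled_s += t - stall_began
            stall_began = None
    # Time from the first frame to stop, including any stalls.
    session_s = marks["stop"] - marks["first_frame"]
    return {
        "startup_s": marks["first_frame"] - marks["play_pressed"],
        "rebuffer_ratio": stalled_s / session_s if session_s > 0 else 0.0,
        "stall_count": float(stall_count),
    }


log = [(0.0, "play_pressed"), (1.5, "first_frame"), (300.0, "stall_start"),
       (304.0, "stall_end"), (601.5, "stop")]
print(qoe_summary(log))
```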

QoS metrics (what causes QoE failures)

- Latency: if it spikes, startup and switching feel slow

- Jitter: the silent killer—unstable delay triggers buffering

- Packet loss: small loss can cause visible interruptions

- Sustained throughput: steady delivery over minutes, not seconds
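Sustained throughput is easiest to see when you split a transfer into time windows instead of reporting one headline number. This sketch assumes you already have per-second byte counts from some bandwidth monitor. The 10-second window and the choice to compare best and worst windows are illustrative assumptions.

```python
def window_throughput_mbps(bytes_per_second: list[int], window_s: int = 10) -> list[float]:
    """Average Mbit/s for each full window of per-second byte counts."""
    rates = []
    for start in range(0, len(bytes_per_second) - window_s + 1, window_s):
        chunk = bytes_per_second[start:start + window_s]
        rates.append(sum(chunk) * 8 / window_s / 1_000_000)
    return rates


# 60 s of samples: strong start, a sagging middle, then recovery.
samples = [3_000_000] * 20 + [500_000] * 20 + [3_000_000] * 20
rates = window_throughput_mbps(samples)
print(f"best window {max(rates):.1f} Mbit/s, worst window {min(rates):.1f} Mbit/s")
```

A short test that only sampled the first few seconds would report the best window. The worst window is closer to what a long playback session has to survive.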

Metrics table (practical thresholds you can actually use)

The goal here is not perfection. It’s “good enough to be reliable,” and knowing what “bad” looks like.

| Metric | Layer | Why it matters | Healthy range (practical) | Red flag pattern |
|---|---|---|---|---|
| Startup time | QoE | first impression + usability | consistent and quick | slow only at peak hours |
| Rebuffer ratio | QoE | the #1 trust killer | near-zero in normal use | frequent short pauses |
| Jitter | QoS | causes buffer instability | low and steady | random spikes, especially evenings |
| Packet loss | QoS | leads to errors/pauses | ideally near-zero | micro-loss that repeats |
| Latency | QoS | impacts switching/response | stable is more important than “low” | sudden high peaks |
| Bitrate stability | QoE | quality consistency | mostly steady | constant up/down “pumping” |
| Error frequency | QoE | reveals fragility | rare | repeated reconnects/crashes |

How to use this table:

If your QoS is unstable (jitter/loss), your QoE will eventually suffer—on Windows, Android TV, mobile, all of it. Fixing QoS improves everything at once.
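The "fix QoS first" rule can be written as a small triage function. This is a sketch under stated assumptions: the default thresholds (30 ms jitter, 0.5% loss, 1% rebuffer ratio) are placeholders chosen for illustration, not published standards, and the input is a plain dict with `jitter_ms` and `loss_pct` keys.

```python
def triage(qos: dict[str, float], rebuffer_ratio: float,
           max_jitter_ms: float = 30.0, max_loss_pct: float = 0.5,
           max_rebuffer_ratio: float = 0.01) -> str:
    """Suggest which layer to fix first. Default thresholds are placeholders."""
    problems = []
    if qos["jitter_ms"] > max_jitter_ms:
        problems.append("jitter")
    if qos["loss_pct"] > max_loss_pct:
        problems.append("packet loss")
    if problems:
        # Unstable delivery affects every device, so the network comes first.
        return "fix network first: " + ", ".join(problems)
    if rebuffer_ratio > max_rebuffer_ratio:
        # Clean QoS but poor QoE points at the player or endpoint.
        return "network looks clean: check the player/endpoint"
    return "healthy"


print(triage({"jitter_ms": 45.0, "loss_pct": 0.0}, rebuffer_ratio=0.05))
print(triage({"jitter_ms": 3.0, "loss_pct": 0.0}, rebuffer_ratio=0.05))
```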

Edge/CDN thinking (without hype): why peak hours feel different

When streaming is stable at 11:00 and unstable at 21:00, the usual cause is shared load somewhere along the delivery chain. Even if you never see “CDN” on the surface, the concept matters:

- Closer delivery points reduce the distance and variability between user and stream origin

- Load distribution prevents one path from becoming overloaded

- Better routing options reduce the chance that a single network segment ruins playback

In 2026, this “edge mindset” is one of the cleanest ways to talk about reliability without slipping into risky language. You’re describing infrastructure design, not content.
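The load-distribution idea can be illustrated with a toy selection rule. The sketch assumes a hypothetical list of delivery points, each with a measured latency and a current load fraction, and picks the lowest-latency point that still has headroom. Real CDNs use far richer mechanisms (DNS or anycast routing, health checks), so this only shows the principle that an overloaded path gets skipped even when it is the closest.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    name: str          # hypothetical delivery point label
    latency_ms: float  # measured round-trip time to this point
    load: float        # current utilization, 0.0 to 1.0


def pick_edge(nodes: list[EdgeNode], max_load: float = 0.8) -> EdgeNode:
    """Prefer the closest node with headroom; otherwise the least loaded one."""
    with_headroom = [n for n in nodes if n.load < max_load]
    if with_headroom:
        return min(with_headroom, key=lambda n: n.latency_ms)
    return min(nodes, key=lambda n: n.load)


# At peak time the closest node is saturated, so the next-closest is chosen.
nodes = [EdgeNode("edge-a", 12.0, 0.95), EdgeNode("edge-b", 18.0, 0.40),
         EdgeNode("edge-c", 30.0, 0.10)]
print(pick_edge(nodes).name)
```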

Monitoring (what “trusted” actually means)

A service can be called “reliable” only if it behaves like a system that is observed and managed. Monitoring is not a buzzword. It’s the ability to answer:

- What changed when quality dropped?

- How quickly was it detected?

- What did you do to restore stability?

Monitoring checklist (simple but professional)

| What you monitor | What it tells you | Why it builds trust |
|---|---|---|
| Startup delay trends | performance drift | reveals congestion early |
| Buffering events | real user pain | direct QoE indicator |
| Error spikes | fragility or outages | predicts failures |
| Peak-hour comparisons | capacity stress | shows scaling limits |
| Device pattern differences | endpoint issues | isolates Windows vs TV |
| Support diagnostics quality | operational maturity | reduces guessing |

This is the kind of language that separates a “tool” from an operated service, and it matches how legitimately operated systems are discussed.
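The "startup delay trends" and "error spikes" rows can be tracked with a rolling baseline. This minimal sketch flags any hour whose value exceeds a chosen multiple of the mean of the previous hours. The window of 6 hours and factor of 2.0 are illustrative assumptions, not recommended values.

```python
from statistics import mean


def flag_spikes(values: list[float], window: int = 6, factor: float = 2.0) -> list[int]:
    """Indexes where a value exceeds `factor` times the mean of the prior `window` values."""
    flagged = []
    for i in range(window, len(values)):
        baseline = mean(values[i - window:i])
        # Skip zero baselines so a single first error is not treated as a spike.
        if baseline > 0 and values[i] > factor * baseline:
            flagged.append(i)
    return flagged


# Hourly error counts from 14:00 to 23:00; the late-evening jump stands out.
hourly_errors = [2, 1, 2, 3, 2, 2, 2, 3, 9, 11]
print(flag_spikes(hourly_errors))
```

Here the flagged indexes correspond to 22:00 and 23:00, which is the "slow only at peak hours" pattern from the metrics table expressed as data rather than a feeling.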

Uptime and redundancy (how reliability is engineered)

Uptime is not a magic number. It’s the outcome of design choices:

- Capacity planning: enough headroom so peak hours don’t collapse quality

- Redundancy: alternate paths and components so one failure doesn’t end the session

- Failover behavior: when something degrades, the system routes around it

- Incident process: detect → isolate → restore → learn
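The redundancy and failover bullets describe a pattern that is easy to show in miniature: try sources in priority order and move on when one fails. The sketch assumes hypothetical source names and a caller-supplied `check` function standing in for a real health check. It is not any particular player's or provider's mechanism.

```python
from typing import Callable, Iterable, Optional


def first_healthy(sources: Iterable[str], check: Callable[[str], bool]) -> Optional[str]:
    """Return the first source that passes the health check, or None if all fail."""
    for source in sources:
        try:
            if check(source):
                return source
        except OSError:
            # Treat network errors like a failed check and try the next path.
            continue
    return None


down = {"primary"}
print(first_healthy(["primary", "backup-1", "backup-2"], lambda s: s not in down))
```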

“Reliability scorecard” you can apply as a buyer

Use this to evaluate a provider or plan in a calm, professional way (including when looking at worldiptv as an evaluation path).

| Signal | What a mature answer sounds like | What a weak answer sounds like |
|---|---|---|
| Monitoring | “We track QoE trends and error spikes.” | “It’s usually fine.” |
| Redundancy | “We have fallback paths and capacity headroom.” | “Try again later.” |
| Support process | “We isolate device vs network vs service.” | “Restart your app.” |
| Transparency | “We can explain what changed.” | “Nothing is wrong on our side.” |

Multi-device reality: Windows is often the best diagnostic hub

A common reason an iptv player windows setup is valuable in 2026 is that Windows can act as a control and verification station:

- Test on Windows (preferably stable connectivity) to establish a baseline

- Compare to Android TV endpoint for living-room consistency

- Compare to mobile for Wi-Fi behavior

- If only one device fails, the issue is often endpoint-specific

- If all devices fail together, the issue is often network-wide or operational
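The two "if" rules above translate directly into a small decision function. This sketch adds one assumption of its own: when several but not all devices fail together (for example, every Wi-Fi device while the wired baseline holds), it reports that group as a shared segment to inspect. Device names are hypothetical labels.

```python
def isolate(results: dict[str, bool]) -> str:
    """Map per-device results (True = stable) to the most likely failing layer."""
    if len(results) < 2:
        raise ValueError("compare at least two devices")
    failing = sorted(device for device, ok in results.items() if not ok)
    if not failing:
        return "no fault reproduced"
    if len(failing) == len(results):
        return "network-wide or operational"
    if len(failing) == 1:
        return f"endpoint-specific: {failing[0]}"
    # Assumption: a partial group failing together shares a segment (e.g. Wi-Fi).
    return "shared segment: " + ", ".join(failing)


evening = {"windows-ethernet": True, "tv-wifi": False, "phone-wifi": False}
print(isolate(evening))
```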

Mini case study (peak-hour instability)

A home has Windows on stable connectivity and a TV endpoint on Wi-Fi. Playback is fine mid-day, then buffering spikes at night. Windows remains stable while Wi-Fi devices degrade.

What that usually means: local Wi-Fi congestion or interference, not a “Windows player problem.”

Why this matters: a stable Windows baseline saves time and prevents unnecessary changes.

Quote (practical): “Change the layer that fixes every device first.”

Germany-focused intent: germany iptv and deutschland iptv as “reliability searches”

People often type germany iptv or deutschland iptv when they’re really searching for:

- stable performance in dense housing (Wi-Fi congestion)

- predictable behavior on office networks

- consistent multi-device viewing without constant troubleshooting

The most professional response is not “claims,” but an evaluation method:

- track peak-hour consistency

- confirm low buffering and stable startup

- test across at least two device types

- judge support by diagnostic clarity

This approach stays DMCA-safe because it centers on delivery quality and operations.

iptv player windows in 2026: Player Types, Multi-Device Environments, and Real-World Trust Signals

A reliable iptv player windows setup in 2026 is rarely just “install a player and hope.” The setups that feel smooth and professional usually share a clear architecture:

- Windows = control hub (testing, consistency checks, diagnostics, policy-friendly workflows)

- Android TV = stable living-room endpoint (remote-friendly UX, appliance-like consistency)

- Mobile + browser = verification devices (quick comparison, Wi-Fi reality check)

This part shows how to choose a Windows IPTV player by capabilities (not hype), how to design multi-device environments, and what trust signals matter for germany iptv / deutschland iptv intent without risky framing.

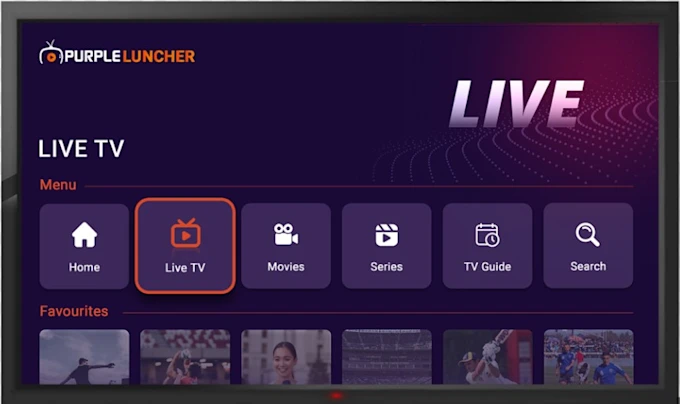

Player types for iptv player windows (2026) — choose by capabilities, not marketing

Instead of naming specific apps, it’s safer and more useful to choose by technical behavior and operational fit. Most Windows playback tools fall into a few categories:

1) “Dedicated desktop player” style (stability + hardware acceleration focus)

These are players designed for long sessions and consistent playback. They usually perform best when:

- hardware acceleration is stable on your system

- the player can recover sessions cleanly

- error handling is predictable

Best for: power users, testing, long playback sessions, multi-monitor desks.

2) “Browser-based playback” style (convenience + quick verification)

This is useful when you want the fastest “does it work here?” check.

- great for comparing networks (home vs office vs mobile hotspot)

- good for quick validation

- less predictable for long sessions if the browser is overloaded

Best for: fast verification, business environments, quick diagnostics.

3) “Managed workplace playback” style (policy-friendly, repeatable workflow)

In professional environments, the strongest choice is often the one that:

- behaves consistently with security policies

- can be documented and repeated

- reduces unknown variables (plugins, unstable add-ons)

Best for: businesses, meeting rooms, controlled networks.

Quote (enterprise mindset): “The best player is the one you can run the same way every day.”

The trusted IPTV Player Windows checklist (2026)

This checklist is designed to help you evaluate any iptv player windows option without risky language. It’s also a clear trust signal because it focuses on system quality and operations.

Pass/Fail + score checklist

| Requirement | Why it matters | Pass indicators | Red flags |
|---|---|---|---|
| Stable playback engine | fewer drops, smoother sessions | long sessions with no crashes | frequent restarts |
| Hardware acceleration support | lowers CPU load, improves stability | smooth 4K playback where applicable | high CPU + stutter |
| Predictable buffering behavior | reduces jitter sensitivity | stable buffer, fewer pauses | constant micro-buffering |
| Clear error visibility | faster diagnosis | readable error states/logs | “it just stops” |
| Update and security hygiene | long-term reliability | regular updates | outdated builds |
| Multi-device compatibility | consistent experience | same account/workflow across devices | works only on one endpoint |
| Support readiness | faster resolution | reproducible steps, time windows | vague explanations |

How to use it:

Score each player 0–2 per row (0 = poor, 1 = acceptable, 2 = strong). The best choice is often the one that wins stability and error visibility, not the one with the most features.
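One way to encode this scoring is sketched below: sum the 0–2 scores per player and break ties on stability plus error visibility. The row keys are hypothetical names for the checklist rows, and using those two rows as the tie-breaker is an interpretation of the guidance above rather than a fixed rule.

```python
# Hypothetical keys, one per row of the checklist table.
ROWS = ["stability", "hw_accel", "buffering", "error_visibility",
        "updates", "multi_device", "support"]


def rank_players(scores: dict[str, dict[str, int]]) -> list[str]:
    """Rank players by total 0-2 score, breaking ties on stability + error visibility."""
    for player, row in scores.items():
        for key in ROWS:
            if row.get(key) not in (0, 1, 2):
                raise ValueError(f"{player}: '{key}' must be scored 0, 1, or 2")

    def sort_key(player: str) -> tuple[int, int]:
        row = scores[player]
        return sum(row[k] for k in ROWS), row["stability"] + row["error_visibility"]

    return sorted(scores, key=sort_key, reverse=True)


scores = {
    # Feature-rich but crash-prone, with opaque errors.
    "player_a": {"stability": 1, "hw_accel": 2, "buffering": 2, "error_visibility": 0,
                 "updates": 2, "multi_device": 2, "support": 1},
    # Fewer extras, but steady and easy to diagnose.
    "player_b": {"stability": 2, "hw_accel": 1, "buffering": 2, "error_visibility": 2,
                 "updates": 1, "multi_device": 1, "support": 1},
}
print(rank_players(scores))
```

Both example players total 10 points, so the tie-breaker decides, and the steadier, more diagnosable player ranks first.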

Multi-device environments (2026): IPTV Player Windows as a hub, not a single endpoint

A common reason people search iptv player windows is that Windows fits into a larger environment: TV + laptop + phones + tablets. The best results come from designing the environment so it stays stable when devices multiply.

Multi-device design principles (simple, effective)

- One stable baseline device (often Windows on stable connectivity) to confirm reality

- One living-room endpoint (Android TV device) for daily use with minimal friction

- At least one “verification device” (mobile) to test Wi-Fi behavior quickly

- Avoid changing multiple variables at once (device + network + settings)

- Measure peak hour behavior (evening stability is more important than midday)
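The "avoid changing multiple variables at once" principle amounts to a simple test plan: one baseline run, then runs that each differ from it in exactly one way. The device, link, and time labels below are hypothetical examples.

```python
BASELINE = {"device": "windows-desktop", "link": "ethernet", "time": "midday"}
ALTERNATIVES = {"device": ["android-tv"], "link": ["wifi"], "time": ["evening"]}


def one_change_plan(baseline: dict[str, str],
                    alternatives: dict[str, list[str]]) -> list[dict[str, str]]:
    """Baseline run followed by runs that each change exactly one variable."""
    plan = [dict(baseline)]
    for variable, options in alternatives.items():
        for option in options:
            run = dict(baseline)
            run[variable] = option
            plan.append(run)
    return plan


for run in one_change_plan(BASELINE, ALTERNATIVES):
    print(run)
```

If only the Wi-Fi run degrades, the link is the suspect; if only the evening run degrades, peak-hour load is. Changing two variables in one run would make that attribution impossible.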

Multi-device orchestration matrix

| Environment | Goal | Recommended device role | Key risk | Best mitigation |
|---|---|---|---|---|
| Single-room home | simple stability | Android TV endpoint + Windows for testing | Wi-Fi interference | Ethernet for main endpoint |
| Family home (2–6 devices) | consistency under load | Windows baseline + Android TV + mobile | congestion at peak hours | reduce Wi-Fi load, prioritize stability |
| Remote work + entertainment | “no surprises” | Windows baseline on stable link | jitter spikes | wired where possible |
| Small business waiting room | predictable UX | managed Windows or controlled endpoint | update surprises | scheduled updates + documentation |
| Hospitality (multi-room) | uniform behavior | controlled endpoints + central testing | scale amplifies failures | inventory + controlled rollout |

Use cases: home power users, businesses, hospitality (with realistic expectations)

Home power users (the “it must just work” standard)

Power users often want:

- smooth playback across multiple rooms

- stable performance during peak hours

- quick recovery when something goes wrong

What usually breaks stability:

Wi-Fi variability and device contention (multiple streams + background downloads + smart devices).

What usually fixes stability across all devices:

Improving the delivery layer (network stability) rather than swapping players repeatedly.

Businesses (predictability beats complexity)

For business workflows, the winning approach is often:

- fewer moving parts

- repeatable setup documentation

- clear support diagnostics

That means the “best” Windows player is frequently the one that:

- behaves consistently after updates

- has predictable error handling

- can be run with minimal user intervention

Hospitality (operations-first, not feature-first)

Hospitality success looks like:

- devices that can be tracked and managed

- controlled updates (no surprise UI changes)

- a process for quick replacements and isolation

Windows can still play a role here, often as a central test station, but endpoints should be simplified for consistency.

Trust signals: how to judge reliability without risky promises

This section helps you evaluate services and setups professionally—especially for germany iptv / deutschland iptv searches where the intent is “reliable here.”

Trust signals that matter in 2026

- Monitoring language that makes sense: trends, error spikes, peak-hour behavior

- Redundancy mindset: the system recovers and doesn’t collapse under load

- Support process quality: device vs network vs operations isolation

- Transparency: clear explanations, not vague reassurance

- Stability across device types: Windows baseline + Android TV endpoint consistency

“Trust score” quick rubric (0–10)

| Category | What you look for | Score (0–10) |
|---|---|---|
| Stability | few errors, low buffering | |
| Predictability | consistent peak-hour behavior | |
| Support clarity | real diagnostic flow | |
| Multi-device fit | works across endpoints | |
| Transparency | explains changes and fixes | |

A reliable system typically scores well across all categories rather than being “great sometimes.”
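Because the rubric rewards consistency, the weakest category deserves as much attention as the total. This sketch reports the average alongside the lowest-scoring category and flags any profile whose weakest score falls below a floor. The floor of 6 and the category keys are arbitrary example choices.

```python
def trust_summary(scores: dict[str, int], floor: int = 6) -> dict:
    """Average and weakest category of a 0-10 rubric; flags uneven profiles."""
    if any(not 0 <= s <= 10 for s in scores.values()):
        raise ValueError("scores must be between 0 and 10")
    weakest = min(scores, key=scores.get)
    return {
        "average": sum(scores.values()) / len(scores),
        "weakest": weakest,
        # A low minimum is the "great sometimes" pattern, even with a decent average.
        "uneven": scores[weakest] < floor,
    }


example = {"stability": 9, "predictability": 4, "support_clarity": 8,
           "multi_device_fit": 9, "transparency": 8}
print(trust_summary(example))
```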

Mini case study: multi-device stability with Windows as baseline

A home user reports: “Playback is fine in the morning but fails in the evening.” They use:

- Windows desktop (stable connection)

- Android TV endpoint (Wi-Fi)

- mobile phone (Wi-Fi)

Observations:

- Windows playback stays steady

- Android TV and mobile degrade in the evening

Interpretation:

This points strongly toward Wi-Fi congestion or interference at peak times rather than a Windows player problem. The Windows baseline confirms that the service path is not failing universally.

Result:

Instead of changing players repeatedly, the user focuses on improving the weakest layer (local Wi-Fi stability), which improves every device at once.

Quote (practical): “Fix the layer that benefits all endpoints.”

Plans as Evaluation (2026), Scalability, Legal Clarity + Deep FAQ

A reliable viewing setup is never just “a player.” In 2026, the difference between a smooth experience and constant frustration usually comes down to whether you treat IPTV like a service system: you evaluate performance, confirm multi-device consistency, and choose a plan length that matches how long you need to observe real-world conditions (especially peak hours). This section keeps everything DMCA-safe and focused on stability, monitoring, uptime, and support maturity—the signals that matter for decision-making.

Plans as evaluation (calm, professional, no hype)

Instead of picking a long plan immediately, a more trusted approach is to choose a duration that lets you verify three things:

- Consistency under load (evening peak hours, busy home networks, office Wi-Fi)

- Multi-device parity (Windows baseline + Android TV endpoint + mobile verification)

- Support quality (clear diagnostics flow, not generic responses)

This “evaluation window” approach is practical for individuals and also mirrors how businesses reduce operational risk: measure first, scale second.

Quote (decision discipline): “Long-term reliability is earned through repeatable results, not first-day impressions.”

Plans table for IPTV Player Windows: evaluation-first in 2026

| Duration | Best use | What you can confirm | What to watch closely |
|---|---|---|---|
| 1 month | Quick validation | baseline stability + basic support responsiveness | peak-hour buffering patterns, startup consistency |
| 3 months | Real-life proof | multi-device consistency across routines | Wi-Fi variability, device-specific errors |
| 6 months | Stable routine | fewer surprises across updates and usage cycles | update impact, recurring incidents, response quality |
| 12 months | Long-term confidence | predictable operations and sustained quality | uptime behavior, transparency, support maturity |
| 24 months | Scale and standardize | best for multi-room / business-style stability goals | governance, consistency across locations/endpoints |

Internal reference for plan options:

https://worldiptv.store/world-iptv-plans/

Professional scalability (home → power user → business/hospitality)

Scalability is not “more features.” It’s less fragility when you add devices, rooms, or locations. That’s why a mature approach uses Windows as a baseline, not as the only endpoint. A stable pattern looks like this:

- Windows for control and validation (repeatable tests, baseline behavior)

- Android TV as the primary living-room endpoint (appliance-style UX)

- Mobile as a reality check device (Wi-Fi behavior, quick comparisons)

When users search germany iptv or deutschland iptv, they are often implicitly describing a real environment: dense housing Wi-Fi, shared building interference, or office networks with restrictions. Scalability means your setup stays stable even when the environment is imperfect, because you’ve designed it with the right expectations (monitoring mindset, redundancy language, and support readiness).

Evaluation checklist (what a “trusted” setup proves)

| Category | What “good” looks like | What to record |
|---|---|---|
| Playback stability | low buffering and few errors over long sessions | time windows + device used |
| Peak-hour behavior | performance doesn’t collapse at night | compare midday vs evening |
| Multi-device consistency | Windows baseline and TV endpoint behave similarly | which device fails first |
| Network sensitivity | Wi-Fi issues are identifiable (not mysterious) | Ethernet vs Wi-Fi outcome |
| Support maturity | clear questions + layer isolation | response clarity and speed |
| Transparency | explanations match observed behavior | what changed + what improved |
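The "What to record" column maps naturally onto a small structured log. This sketch assumes a hypothetical record layout (day, period, device, link, buffering count) and answers two of the table's questions: which device fails first, and how midday compares with evening for each link type.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    day: int               # day within the evaluation window
    period: str            # "midday" or "evening"
    device: str            # hypothetical device label
    link: str              # "ethernet" or "wifi"
    buffering_events: int


PERIOD_ORDER = {"midday": 0, "evening": 1}


def first_failure(log: list[Observation], threshold: int = 1) -> Optional[Observation]:
    """Earliest observation whose buffering count reaches the threshold."""
    failures = [o for o in log if o.buffering_events >= threshold]
    return min(failures, key=lambda o: (o.day, PERIOD_ORDER[o.period]), default=None)


def totals_by_link_and_period(log: list[Observation]) -> dict[tuple[str, str], int]:
    """Sum buffering events per (link, period) to compare midday with evening."""
    totals: dict[tuple[str, str], int] = {}
    for o in log:
        key = (o.link, o.period)
        totals[key] = totals.get(key, 0) + o.buffering_events
    return totals


log = [
    Observation(1, "midday", "reception-pc", "ethernet", 0),
    Observation(1, "evening", "reception-pc", "ethernet", 0),
    Observation(1, "midday", "break-room-box", "wifi", 0),
    Observation(1, "evening", "break-room-box", "wifi", 3),
    Observation(2, "evening", "phone", "wifi", 2),
]
print(first_failure(log))
print(totals_by_link_and_period(log))
```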

Short case study (business-style evaluation)

A small office wants stable playback on a Windows PC for a reception area and also wants a simple living-room style device for a break room. They start with a 1-month evaluation window.

- Week 1: Windows baseline is stable on a wired connection; break-room device shows occasional buffering on Wi-Fi.

- Week 2: They test peak hours; Wi-Fi devices degrade while Windows remains stable.

- Week 3: They document “when and where it fails,” then adjust networking policies and placement.

- Week 4: They confirm stability across endpoints and only then extend duration.

Result: They avoid the classic mistake of blaming the player or the service without isolating the weakest layer. Their evaluation creates an operational record they can reuse—very close to how hospitality setups are tested.

External authority context

A professional reliability discussion benefits from understanding how modern delivery and managed connectivity are treated in enterprise environments. When organizations rely on stable delivery across many endpoints, managed routing concepts and enterprise network design patterns often appear in the background—especially where predictable performance matters more than best-case speeds. That broader market context is useful when explaining why stability is a systems outcome (capacity planning, routing options, operational visibility), not a single device setting.

https://www.grandviewresearch.com/industry-analysis/multi-protocol-labelled-switching-internet-protocol-virtual-private-network-market

Broadband network development and the realities of cable infrastructure help explain why end-user experience can vary by neighborhood, building, and time-of-day. Even when a user’s nominal bandwidth looks high, real stability depends on congestion patterns, shared mediums, and local interference—exactly the factors that show up as jitter, packet loss, and peak-hour variability. This kind of infrastructure framing supports a “measure and evaluate” approach for Windows playback in multi-device homes and business networks.

https://www.oecd.org/en/publications/developments-in-cable-broadband-networks_5kmh7b0s68g5-en.html

Market research on pay-TV and the evolution of delivery helps explain why device expectations keep rising: users increasingly expect consistent startup speed, stable quality, and reliability across screens. That pressure encourages more operational discipline (monitoring, redundancy planning, and multi-device consistency), because the experience standard in 2026 is “it just works,” not “it works sometimes.”

https://www.fortunebusinessinsights.com/pay-tv-market-111551

Legal Clarity

- IPTV is a delivery technology; legality depends on licensing and jurisdiction.

- This article does not provide bypass methods or instructions intended to enable infringement.

- Users are responsible for ensuring the services and content they access are properly authorized.

FAQ

1) What does “iptv player windows” mean in 2026?

It usually means a Windows-based playback workflow focused on stability, speed, and control. It is often used as a baseline device for testing and for checking consistency across multiple devices.

2) Is Windows better than an Android TV device for reliability?

Windows can be more powerful for diagnostics and control, while Android TV devices often feel more appliance-like for daily living-room use. Many stable setups use both.

3) Why can buffering happen even with fast internet?

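Because a speed test mainly reports peak throughput over a few seconds. It says little about how consistently packets arrive over an evening of viewing. Jitter (variation in packet delay) and packet loss can disrupt streaming even when bandwidth looks high.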
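To make the jitter and packet-loss point concrete, here is a minimal Python sketch that summarizes a run of latency probes. It assumes you already have round-trip times in milliseconds (for example, copied from repeated ping output), with None marking a probe that got no reply. The input format and the summarize_probe name are illustrative and not part of any tool. Jitter is computed here as the mean absolute change between consecutive received samples. This is a simplified stand-in for the smoothed interarrival jitter defined in RFC 3550.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_probe(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize latency probes; input is a hypothetical list of RTTs in ms, None = no reply."""
    received = [r for r in rtts_ms if r is not None]
    # Packet loss: share of probes that never got a reply.
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms) if rtts_ms else 0.0
    # Simplified jitter: mean absolute change between consecutive received RTTs.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter_ms = mean(diffs) if diffs else 0.0
    avg_rtt_ms = mean(received) if received else float("nan")
    return {"avg_rtt_ms": avg_rtt_ms, "jitter_ms": jitter_ms, "loss_pct": loss_pct}


# Two made-up runs with the same average latency but very different stability.
steady = summarize_probe([20, 21, 20, 21, 20])
unsteady = summarize_probe([5, 40, 8, 35, None, 14])
print("steady:  ", steady)
print("unsteady:", unsteady)
```

In this made-up example both runs average 20.4 ms. The second run, however, shows about 29 ms of jitter and loses one probe in six. That is the kind of connection that can pass a speed test and still buffer.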

4) What’s the most useful way to evaluate stability?

Compare peak-hour and off-peak behavior, then compare two device types (a Windows baseline and a TV endpoint). Record when problems occur and whether they reproduce.
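The peak versus off-peak comparison can be kept in a simple log. The sketch below assumes a hypothetical Session record for each viewing session, holding a device label, the start hour, minutes watched, and buffering events counted by hand or by the player. It also assumes a peak window of 19:00 to 22:59. It reports stalls per viewing hour for each device in each window, so that sessions of different lengths can be compared.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumption: peak viewing runs 19:00-22:59 local time; adjust for your household or office.
PEAK_HOURS = range(19, 23)


@dataclass
class Session:
    device: str     # hypothetical label, e.g. "windows-wired" or "tv-wifi"
    hour: int       # local hour (0-23) when the session started
    minutes: float  # minutes watched
    stalls: int     # buffering events observed during the session


def stalls_per_hour(sessions):
    """Return {(device, window): stalls per viewing hour}, window being "peak" or "off-peak"."""
    minutes = defaultdict(float)
    stalls = defaultdict(int)
    for s in sessions:
        window = "peak" if s.hour in PEAK_HOURS else "off-peak"
        minutes[(s.device, window)] += s.minutes
        stalls[(s.device, window)] += s.stalls
    # Normalize by viewing time so a long evening session is comparable to a short test.
    return {key: stalls[key] / (minutes[key] / 60) for key in minutes if minutes[key] > 0}


log = [
    Session("windows-wired", 20, 60, 0),
    Session("windows-wired", 10, 60, 0),
    Session("tv-wifi", 20, 30, 3),
    Session("tv-wifi", 10, 60, 1),
]
for (device, window), rate in sorted(stalls_per_hour(log).items()):
    print(f"{device:14} {window:9} {rate:.1f} stalls/hour")
```

Suppose a wired Windows baseline stays near zero in both windows while a Wi-Fi endpoint degrades mainly at peak. That pattern suggests the weak layer is the Wi-Fi path rather than the player, which matches the small-office example earlier in this section.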

5) What are the best trust signals in a service or provider?

Clear support diagnostics (layer isolation), transparency about performance, and communication that reflects real monitoring and operational maturity.

6) What does “monitoring” mean for normal users?

It means issues can be detected, explained, and resolved in a structured way, instead of relying on guesses or generic advice.

7) How do I know if the problem is my device, my network, or operations?

If one device fails and others don’t, it’s often endpoint-related. If multiple devices fail together at the same time, it’s often network-wide or service-side.
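The same rule of thumb can be applied to a failure log. This sketch assumes a hypothetical list of (device, timestamp) failure observations and groups them into fixed time buckets. The default bucket is 5 minutes, and the bucket length should divide 60. A bucket with a single failing device is flagged as likely endpoint-related. A bucket in which two or more devices failed is flagged as likely network-wide or service-side. Fixed buckets can split two near-simultaneous failures across a boundary, so treat the output as a starting point for investigation rather than a verdict.

```python
from collections import defaultdict
from datetime import datetime


def classify_incidents(events, bucket_minutes=5):
    """events: hypothetical list of (device, datetime) failure observations."""
    buckets = defaultdict(set)
    for device, ts in events:
        # Round each failure down to the start of its fixed time bucket.
        start = ts.replace(minute=ts.minute - ts.minute % bucket_minutes, second=0, microsecond=0)
        buckets[start].add(device)
    report = []
    for start in sorted(buckets):
        devices = sorted(buckets[start])
        if len(devices) == 1:
            verdict = "likely endpoint"
        else:
            verdict = "likely network-wide or service-side"
        report.append((start, devices, verdict))
    return report


events = [
    ("tv-wifi", datetime(2026, 1, 5, 20, 1)),
    ("tv-wifi", datetime(2026, 1, 5, 21, 12)),
    ("windows-wired", datetime(2026, 1, 5, 21, 14)),
]
for start, devices, verdict in classify_incidents(events):
    print(start.strftime("%H:%M"), devices, verdict)
```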

8) Why is Windows useful as a baseline device?

Because it’s easier to test, compare, and document behavior on Windows, especially when you can control background load and connectivity.

9) What does multi-device consistency actually look like?

Similar startup speed and stable playback across Windows, Android TV, and mobile under the same network conditions, without one device constantly failing first.

10) How should businesses approach playback reliability?

Use repeatable setups, controlled updates, and clear support expectations. Predictability beats complexity in business environments.

11) What changes for germany iptv searches in practice?

Most users are really asking if the setup is reliable in dense housing or mixed networks. Evaluate peak-hour stability and Wi-Fi interference sensitivity.

12) What changes for deutschland iptv on office networks?

Office networks often have policies and restrictions. A policy-friendly, repeatable Windows workflow and clear support diagnostics become more important than extra features.

13) How should I choose plan length without overcommitting?

Choose a duration that matches your evaluation needs: 1 month for baseline, 3 months for routines, 6+ months once stability and support are proven.

14) Where does worldiptv fit in a professional evaluation model?

worldiptv can be evaluated by stability, multi-device consistency, and support maturity, using an evaluation-first plan duration approach.

https://worldiptv.store/world-iptv-plans/

Closing summary for IPTV Player Windows (2026)

A stable 2026 setup is built like a small system: measure what you feel (startup time, buffering), understand what causes it (jitter, packet loss), and use Windows as a baseline to isolate problems before you scale to more screens. That approach matches the real intent behind germany iptv and deutschland iptv searches: reliability in a real environment. When you evaluate worldiptv, treat the plan duration as an evaluation window and scale only after stability is repeatable. If your goal is calm, predictable playback, an iptv player windows setup works best as part of a multi-device design run with a monitoring mindset, not as a single-device gamble.